ChatGPT users have been left concerned after OpenAI’s CEO made a worrying admission about the ‘sensitive’ things you may be telling the AI bot.

Now, we’re all a little bit guilty of being slightly too open and honest with artificial intelligence like ChatGPT.

From using it for simple questions that could also be solved with a Google search, to asking for real-life advice, people have found a varied array of uses for the model.

Some have even gone as far as substituting the AI bot for a therapist or a safe space to speak about their troubles - the most Gen Z thing ever, we know.

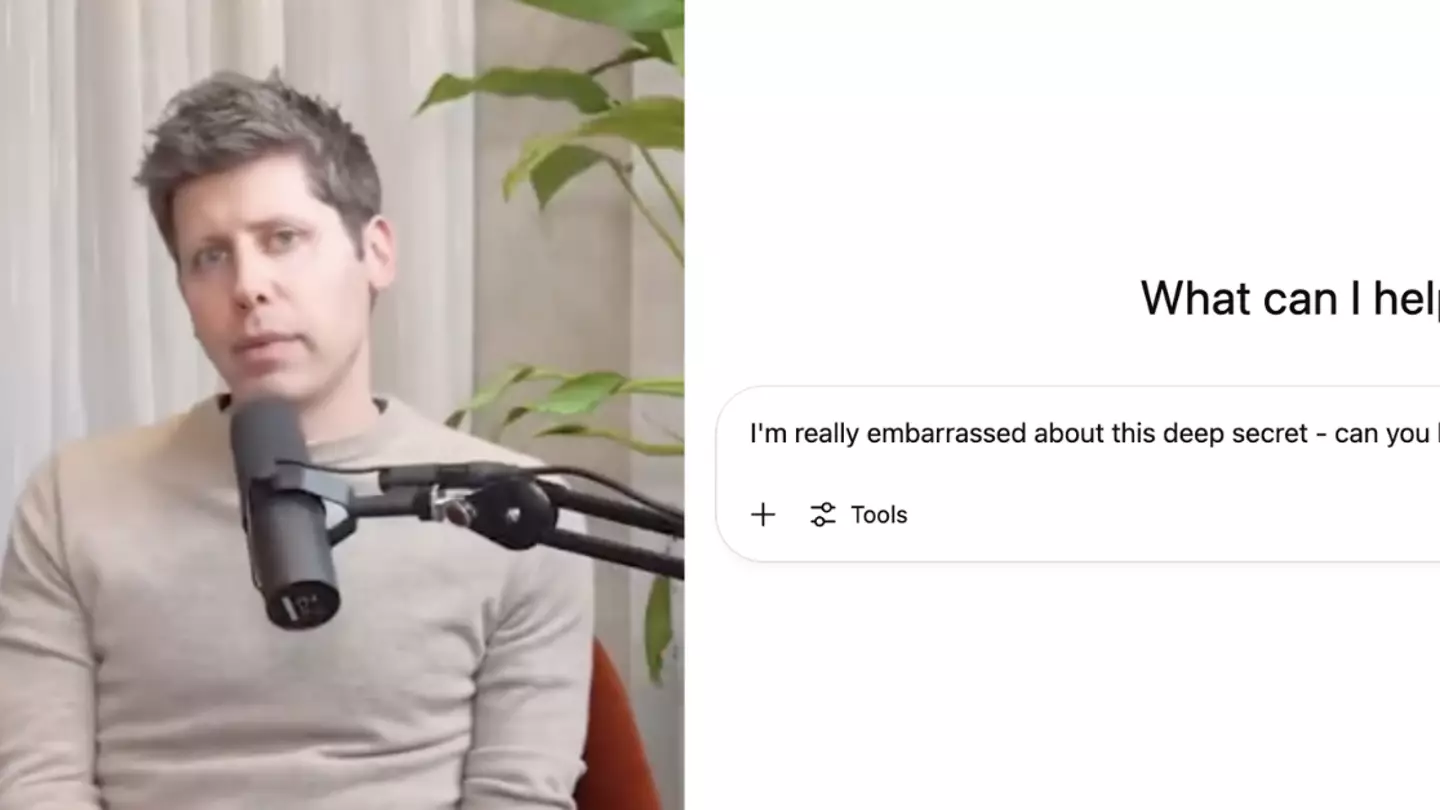

But you might not want to be quite so giving when it comes to your personal information after you’ve heard this warning from the CEO of OpenAI, Sam Altman.

During a podcast appearance this week on This Past Weekend with Theo Von, the entrepreneur talked about the pros and cons of developing AI so rapidly and how these new technologies will change our lives.

He also talked about the overlap between humans and machines, including ethical debates such as what you should and shouldn’t be discussing with AI.

Altman explained that there are certain scenarios in which OpenAI might be required to share your information with others.

He said on the podcast: “So if you go talk to ChatGPT about your most sensitive stuff and then there’s like a lawsuit or whatever, we could be required to produce that, and I think that’s very screwed up.”

Altman admitted: “People talk about the most personal sh** in their lives to ChatGPT.

“People use it - young people, especially, use it - as a therapist, a life coach; having these relationship problems and [asking] ‘what should I do?’

“And right now, if you talk to a therapist or a lawyer or a doctor about those problems, there’s legal privilege for it. There’s doctor-patient confidentiality, there’s legal confidentiality, whatever.”

He added: “And we haven’t figured that out yet for when you talk to ChatGPT.”

The boss insisted that there should be the ‘same concept of privacy for your conversations with AI that we do with a therapist’ and that it should be ‘addressed with some urgency’.

And understandably, people are beginning to freak out about all of the deep, dark secrets they may have shared with their new AI pal.

Others have taken it as an opportunity to poke fun at those who do use ChatGPT for personal reasons.

In the Instagram comments of a post about the news, one user wrote: “He’s talking about illicit activity, not you venting about your ex.”

And a second joked: “Until I find a better therapist it’s ChatGPT.”

Meanwhile, a third pointed out: “This is why it is not always the best option to use ChatGPT as your therapist.”

And a fourth panicked user asked: “Sensitive stuff is so vague. Do you mean illegal stuff? The sensitive stuff I talk about is regarding my feelings.”

“This should have been obvious from the start. It’s free to use, everything you type into it could be subpoenaed or sold,” quipped another social media user.

Elsewhere, others joked: “Don't plan murder with it, got it”, and “Yea probably don't ask it how to bury a body or what does a 9mm gunshot sound like to my neighbors.”

“If you're doing something illegal and you talk to ChatGPT about it, you're not the brightest person in the first place,” someone else chimed in brutally.

And another shocked Instagram user added: “People talk to ChatGPT about their innermost secrets? That’s crazy.”

Now, maybe you’ll think twice before using AI as a safe space - I know I certainly will.
