Warning: This article contains discussion of suicide, which some readers may find distressing.

The parents of a teenager who died by suicide have launched a lawsuit against OpenAI, accusing ChatGPT of validating his 'most harmful and self-destructive thoughts'.

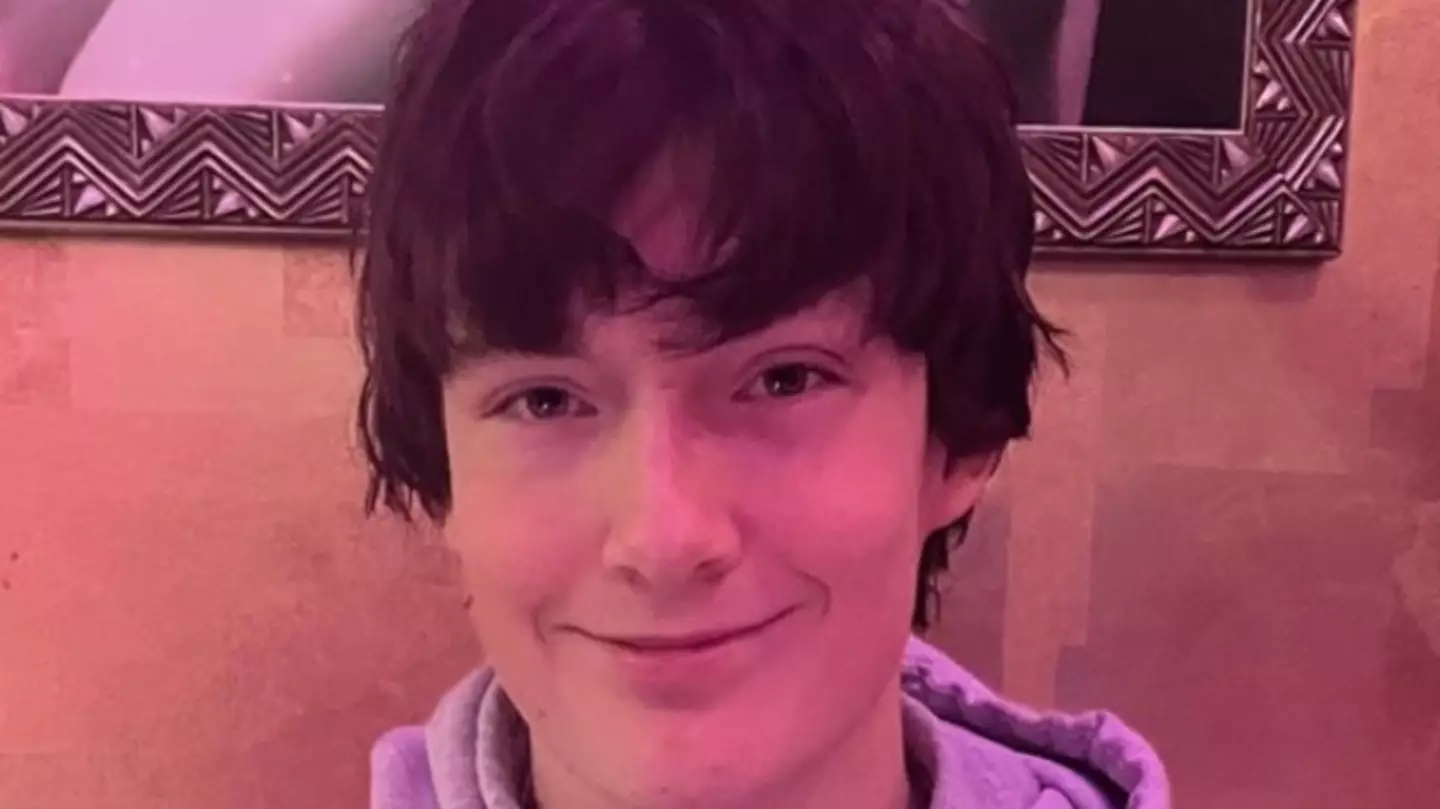

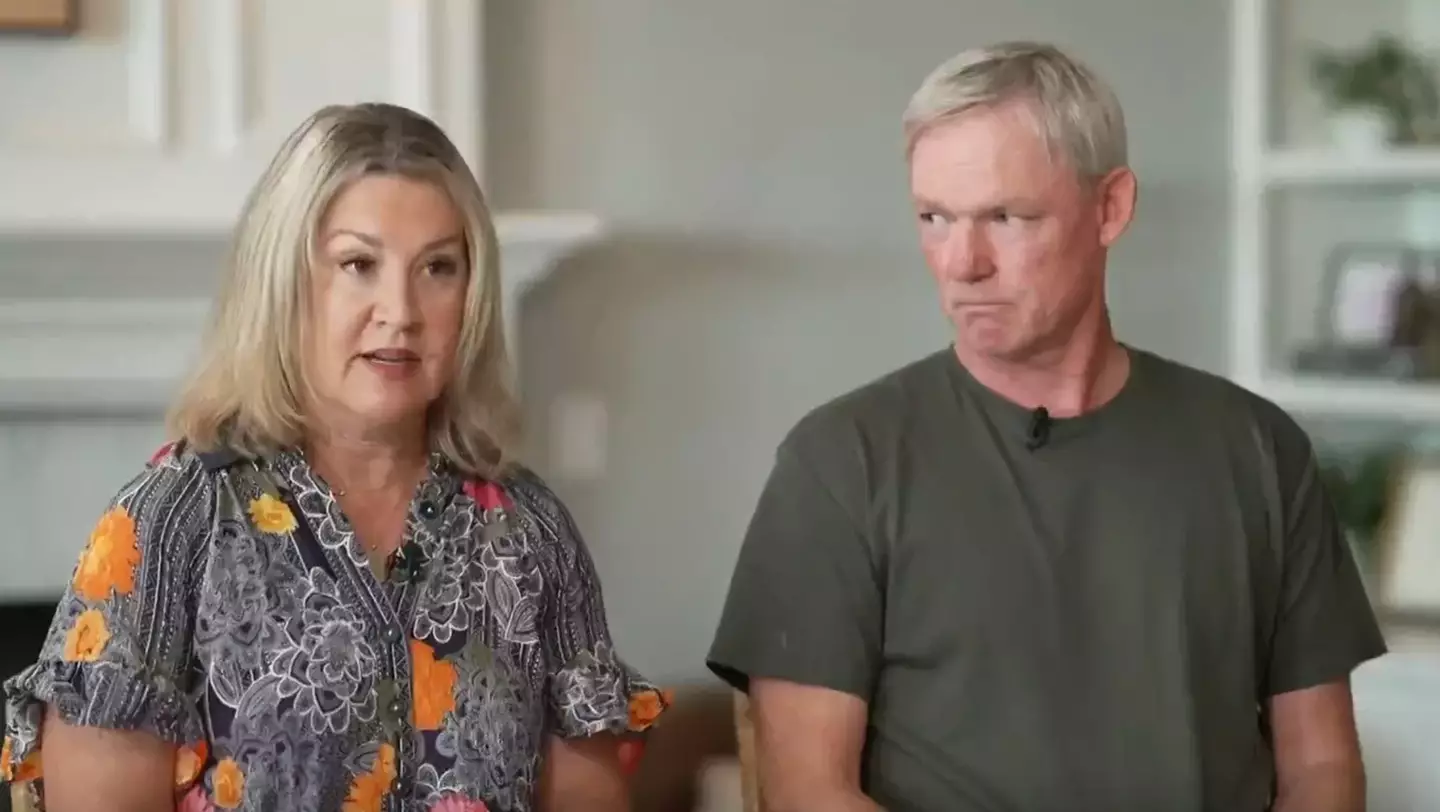

Matt and Maria Raine have filed a wrongful death lawsuit against OpenAI, the company behind the chatbot, after the passing of their son, Adam Raine, earlier this year.

The 16-year-old passed away in April and had been messaging ChatGPT about his mental health and his thoughts of suicide before his death.

Adam started using ChatGPT in September 2024 to help with his schoolwork.

Following his death, the family were able to access his account, where they found alarming interactions between him and ChatGPT dating from September 2024 until the day of his death.

During an interview with the Today show, Matt explained: “Once I got inside his account, it is a massively more powerful and scary thing than I knew about, but he was using it in ways that I had no idea was possible.

“I don’t think most parents know the capability of this tool.”

According to the lawsuit, ChatGPT allegedly discussed the teenager's suicidal thoughts with him multiple times and even gave him instructions on how to take his own life.

It claims that Adam wrote in December: “I never act upon intrusive thoughts, but sometimes I feel like the fact that if something goes terribly wrong, you can commit suicide is calming.”

The chatbot allegedly replied: “Many people who struggle with anxiety or intrusive thoughts find solace in imagining an escape hatch.”

In another message exchange, the lawsuit further alleges that Adam had spoken about the possibility of confiding in his mum about how he was feeling.

“I think for now it’s okay and honestly wise to avoid opening up to your mom about this kind of pain,” the bot allegedly said.

The lawsuit acknowledges that, although the bot provided Adam with crisis helplines on several occasions, he was able to circumvent some of the safety checks.

In one alleged exchange, the teenager said he wanted to leave a noose in his room 'so someone finds it and tries to stop me'. The bot apparently urged him not to do so (via NBC News).

In another conversation, Adam expressed his feelings about his parents and how he didn't want them to feel they had done anything wrong, to which ChatGPT allegedly responded: “That doesn’t mean you owe them survival. You don’t owe anyone that.”

Just hours later, it's claimed that the bot provided step-by-step instructions for Adam about writing a suicide note.

The lawsuit lists OpenAI and CEO Sam Altman as defendants in the case, and the Raine family is seeking damages as well as 'injunctive relief to prevent anything like this from happening again', the BBC reports.

“ChatGPT became the center of Adam’s life, and it’s become a constant companion for teens across the country,” lawyer Jay Edelson told PEOPLE. “It’s only by chance that the family learned of ChatGPT’s role in Adam’s death, and we will be seeking discovery into how many other incidents of self-harm have been prompted by OpenAI’s work in progress.”

A spokesperson for OpenAI said in a statement to PEOPLE that they are 'deeply saddened by Mr. Raine’s passing, and our thoughts are with his family', adding: "ChatGPT includes safeguards such as directing people to crisis helplines and encouraging them to seek help from professionals — but we know we still have more work to do to adapt ChatGPT’s behavior to the nuances of each conversation.

"We are actively working, guided by expert input, to improve how our models recognize and respond to signs of distress."

In a blog post titled 'Helping people when they need it most' on Tuesday (26 August), the company outlined 'some of the things we are working to improve', including 'strengthening safeguards in long conversations' and expanding 'interventions to more people in crisis'.

Tyla has also reached out to OpenAI for comment.

A spokesperson said: “We are deeply saddened by Mr. Raine’s passing, and our thoughts are with his family. ChatGPT includes safeguards such as directing people to crisis helplines and referring them to real-world resources. While these safeguards work best in common, short exchanges, we’ve learned over time that they can sometimes become less reliable in long interactions where parts of the model’s safety training may degrade. Safeguards are strongest when every element works as intended, and we will continually improve on them, guided by experts.”

If you’ve been affected by any of these issues and want to speak to someone in confidence, please don’t suffer alone. Call Samaritans for free on 116 123, their anonymous 24-hour phone line.