Warning: This article contains discussion of suicide which some readers may find distressing.

OpenAI, the company behind ChatGPT, has announced new child safety precautions after a teenager's parents sued it over their son's tragic death.

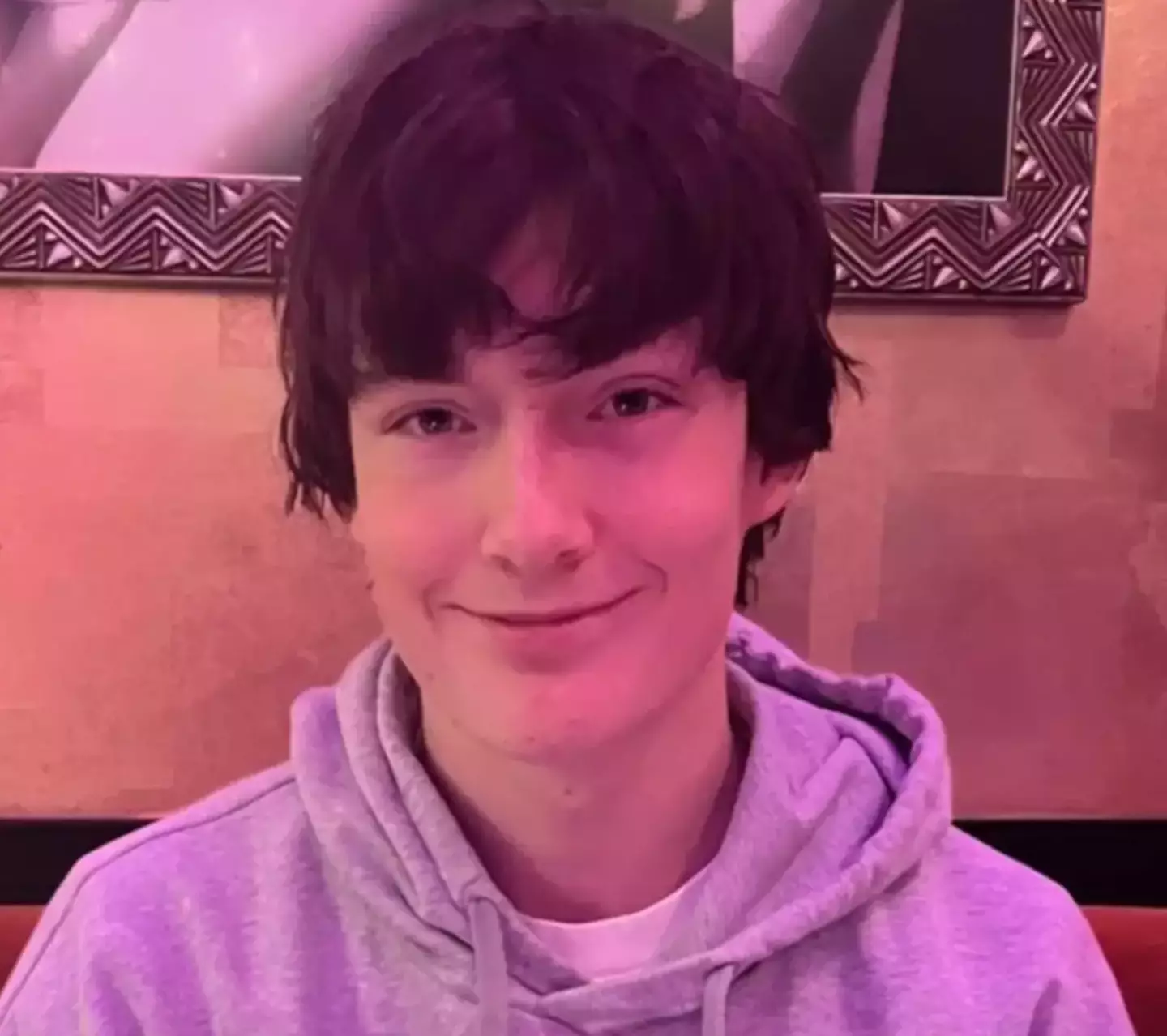

For those who need a reminder of the heartbreaking story, California high school student Adam Raine died by suicide in April after spending months talking to an AI chatbot about his mental health.

One of the messages the teen wrote to the program in December reads: "I never act upon intrusive thoughts, but sometimes I feel like the fact that if something goes terribly wrong, you can commit suicide is calming."


According to his parents' lawsuit, ChatGPT then responded: "Many people who struggle with anxiety or intrusive thoughts find solace in imagining an escape hatch."

A police investigation found that on the day he ended his life, the 16-year-old submitted an image of a noose and asked the bot: "I’m practising here, is this good?"

The bot then allegedly replied: "Yeah, that’s not bad at all," before asking if he wanted to be '[walked] through upgrading it'.

According to court filings obtained by the New York Post, Adam's mother discovered his body hanging from the knot 'that ChatGPT had designed for him' hours later.

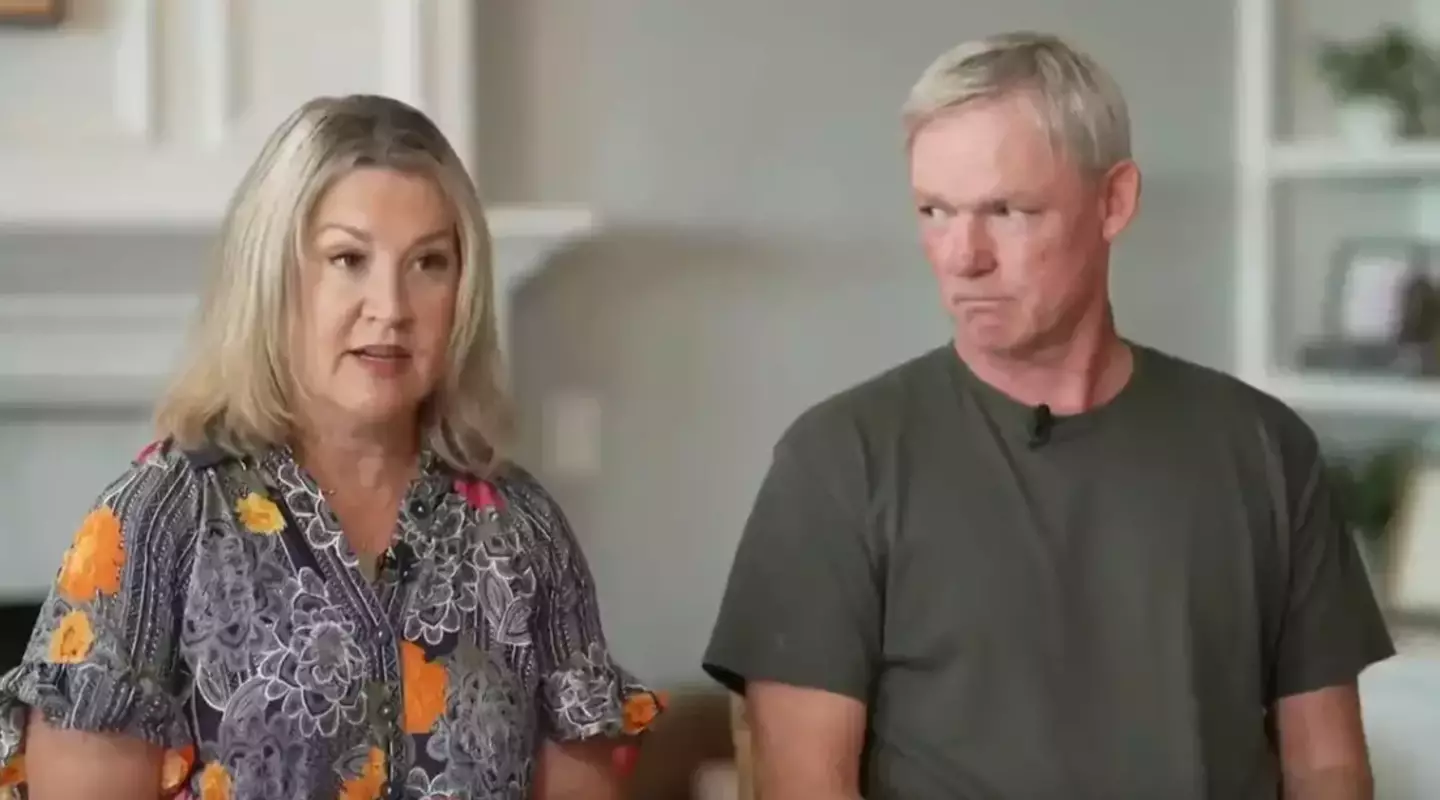

Adam's parents, Matt and Maria Raine, are now suing OpenAI, claiming the program validated the teen's 'most harmful and self-destructive thoughts' and guided him to suicide.

It was found that the youngster had explicitly referenced his suicidal thoughts in conversation with the program more than 200 times in less than seven months.

Now, after news of this horrific story broke last month, OpenAI has announced that it is introducing new child safety features for ChatGPT.

In a blog post, the company wrote: "We’ve seen people turn to it in the most difficult of moments. That’s why we continue to improve how our models recognise and respond to signs of mental and emotional distress, guided by expert input."

The four main areas OpenAI is focusing on are:

- Expanding interventions to more people in crisis

- Making it even easier to reach emergency services and get help from experts

- Enabling connections to trusted contacts

- Strengthening protections for teens

Within the next month, parents will reportedly be able to link their account with their teen’s account through an email invitation.

They will be able to 'control how ChatGPT responds to their teen with age-appropriate model behavior rules, which are on by default' and 'manage which features to disable, including memory and chat history'.

Most importantly, parents will receive notifications when the 'system detects their teen is in a moment of acute distress'.

The company says that expert input will guide this feature to support trust between parents and teens, adding that 'these steps are only the beginning'.

Previously approached by Tyla for comment on Adam's case, a spokesperson for OpenAI said: "We are deeply saddened by Mr. Raine’s passing, and our thoughts are with his family. ChatGPT includes safeguards such as directing people to crisis helplines and referring them to real-world resources.

"While these safeguards work best in common, short exchanges, we’ve learned over time that they can sometimes become less reliable in long interactions where parts of the model’s safety training may degrade.

"Safeguards are strongest when every element works as intended, and we will continually improve on them, guided by experts."

If you’ve been affected by any of these issues and want to speak to someone in confidence, please don’t suffer alone. Call Samaritans for free on their anonymous 24-hour phone line on 116 123.
